In algebra, which is a broad division of mathematics, abstract algebra is the study of algebraic structures. Algebraic structures include groups, rings, fields, modules, vector spaces, lattices, and algebras. The term abstract algebra was coined in the early 20th century to distinguish this area of study from the other parts of algebra.

In mathematics, the additive inverse of a number a is the number that, when added to a, yields zero. This number is also known as the opposite (number), sign change, and negation. For a real number, it reverses its sign: the additive inverse of a positive number is negative, and the additive inverse of a negative number is positive. Zero is the additive inverse of itself.

In mathematics, a Cauchy sequence, named after Augustin-Louis Cauchy, is a sequence whose elements become arbitrarily close to each other as the sequence progresses. More precisely, given any small positive distance, all but a finite number of elements of the sequence are less than that given distance from each other.
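The definition can be probed numerically on a finite stretch of a sequence. The sketch below assumes the example sequence x_n = 1/n and a hypothetical helper `tail_is_within`, neither of which comes from the text. A finite check like this can only illustrate the Cauchy property, not prove it.

```python
from fractions import Fraction
from itertools import combinations

def tail_is_within(seq, start, eps):
    """True if every pair of terms from index `start` onward differs by less than eps."""
    tail = seq[start:]
    return all(abs(a - b) < eps for a, b in combinations(tail, 2))

# Illustrative sequence x_n = 1/n for n = 1..200, in exact rational arithmetic.
xs = [Fraction(1, n) for n in range(1, 201)]
eps = Fraction(1, 50)

# From x_51 on, every term lies strictly between 0 and 1/50,
# so any two of them are closer together than eps.
print(tail_is_within(xs, 50, eps))

# The whole sequence fails: x_1 - x_2 = 1/2 already exceeds eps.
print(tail_is_within(xs, 0, eps))
```

Only finitely many leading terms have to be discarded before the remaining ones are mutually within the chosen distance, which is the "all but a finite number" clause of the definition.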

In mathematics, a binary operation is commutative if changing the order of the operands does not change the result. It is a fundamental property of many binary operations, and many mathematical proofs depend on it. It is most familiar as the property that says "3 + 4 = 4 + 3" or "2 × 5 = 5 × 2", but it can also be used in more advanced settings. The name is needed because there are operations, such as division and subtraction, that do not have it; such operations are not commutative, and so are referred to as noncommutative operations. The idea that simple operations, such as the multiplication and addition of numbers, are commutative was for many years implicitly assumed. Thus, this property was not named until the 19th century, when mathematics started to become formalized. A corresponding property exists for binary relations; a binary relation is said to be symmetric if the relation applies regardless of the order of its operands; for example, equality is symmetric as two equal mathematical objects are equal regardless of their order.
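As a quick illustration, commutativity can be tested on a handful of sample values. The helper name `is_commutative_on` and the sample list are assumptions made for this sketch. Passing the check on samples does not prove commutativity in general, but one failing pair is enough to show an operation is noncommutative.

```python
import operator
from itertools import product

def is_commutative_on(op, samples):
    """Check op(a, b) == op(b, a) for every ordered pair drawn from samples."""
    return all(op(a, b) == op(b, a) for a, b in product(samples, repeat=2))

samples = [1, 2, 3, 4, 5]  # small nonzero integers, so division is defined

for name, op in [("add", operator.add), ("mul", operator.mul),
                 ("sub", operator.sub), ("truediv", operator.truediv)]:
    print(name, is_commutative_on(op, samples))
```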

In mathematics, specifically set theory, the Cartesian product of two sets A and B, denoted A × B, is the set of all ordered pairs (a, b) where a is in A and b is in B. In terms of set-builder notation, that is, A × B = {(a, b) | a ∈ A and b ∈ B}.
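For finite sets the product can be enumerated directly. The sketch below uses Python's `itertools.product`; the two small sets are made up for illustration.

```python
from itertools import product

A = {1, 2}
B = {"x", "y", "z"}

# All ordered pairs (a, b) with a in A and b in B.
AxB = set(product(A, B))
# Swapping the factors gives pairs (b, a), which is a different set.
BxA = set(product(B, A))

print(len(AxB))          # |A × B| = |A| * |B|
print((1, "x") in AxB)   # order inside a pair matters
print(AxB == BxA)
```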

In physics and mathematics, the dimension of a mathematical space is informally defined as the minimum number of coordinates needed to specify any point within it. Thus a line has a dimension of one (1D) because only one coordinate is needed to specify a point on it – for example, the point at 5 on a number line. A surface such as a plane or the surface of a cylinder or sphere has a dimension of two (2D) because two coordinates are needed to specify a point on it – for example, both a latitude and longitude are required to locate a point on the surface of a sphere. The inside of a cube, a cylinder or a sphere is three-dimensional (3D) because three coordinates are needed to locate a point within these spaces.

W

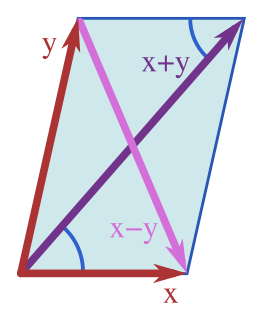

WIn mathematics, physics and engineering, a Euclidean vector is a geometric object that has magnitude and direction. Vectors can be added to other vectors according to vector algebra. A Euclidean vector is frequently represented by a ray, or graphically as an arrow connecting an initial point A with a terminal point B, and denoted by .
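A minimal sketch of these ideas in coordinates, assuming points in the plane are given as tuples. The helper names `vector_between`, `add` and `magnitude`, and the sample points, are illustrative only.

```python
import math

def vector_between(a, b):
    """Components of the arrow from initial point a to terminal point b."""
    return tuple(bi - ai for ai, bi in zip(a, b))

def add(u, v):
    """Component-wise vector addition."""
    return tuple(ui + vi for ui, vi in zip(u, v))

def magnitude(v):
    """Euclidean length of v."""
    return math.hypot(*v)

A, B, C = (1, 2), (4, 6), (4, 2)
AB = vector_between(A, B)
BC = vector_between(B, C)

print(AB, magnitude(AB))
# Going from A to B and then from B to C is the same as going from A to C.
print(add(AB, BC) == vector_between(A, C))
```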

In mathematics, a field is a set on which addition, subtraction, multiplication, and division are defined and behave as the corresponding operations on rational and real numbers do. A field is thus a fundamental algebraic structure which is widely used in algebra, number theory, and many other areas of mathematics.

In mathematics, the general linear group of degree n is the set of n×n invertible matrices, together with the operation of ordinary matrix multiplication. This forms a group, because the product of two invertible matrices is again invertible, and the inverse of an invertible matrix is invertible, with the identity matrix as the identity element of the group. The group is so named because the columns of an invertible matrix are linearly independent, hence the vectors/points they define are in general linear position, and matrices in the general linear group take points in general linear position to points in general linear position.
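The group properties can be checked by hand for small cases. The sketch below restricts itself to 2×2 matrices with rational entries, stored as nested tuples. The helpers `det`, `matmul` and `inverse` and the sample matrices are assumptions for illustration, not a general implementation.

```python
from fractions import Fraction

def det(m):
    (a, b), (c, d) = m
    return a * d - b * c

def matmul(m, n):
    """Product of two 2x2 matrices."""
    return tuple(
        tuple(sum(m[i][k] * n[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def inverse(m):
    """Inverse of a 2x2 matrix via the adjugate formula; requires det(m) != 0."""
    (a, b), (c, d) = m
    dt = Fraction(det(m))
    if dt == 0:
        raise ValueError("matrix is not invertible")
    return ((d / dt, -b / dt), (-c / dt, a / dt))

I2 = ((1, 0), (0, 1))
P = ((2, 1), (1, 1))   # det = 1
Q = ((1, 2), (3, 4))   # det = -2

# Closure: the determinant of the product is the product of determinants, hence nonzero.
print(det(matmul(P, Q)), det(P) * det(Q))
# Inverses: multiplying by the inverse gives the identity matrix.
print(matmul(P, inverse(P)) == I2)
```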

In mathematics and physics, the term generator or generating set may refer to any of a number of related concepts. The underlying concept in each case is that of a smaller set of objects, together with a set of operations that can be applied to it, that result in the creation of a larger collection of objects, called the generated set. The larger set is then said to be generated by the smaller set. It is commonly the case that the generating set has a simpler set of properties than the generated set, thus making it easier to discuss and examine. It is usually the case that properties of the generating set are in some way preserved by the act of generation; likewise, the properties of the generated set are often reflected in the generating set.
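One concrete reading of this idea is closure under a single binary operation. The sketch below assumes that reading, and uses addition modulo 12 as the example operation. The function `generated_set` and its `max_rounds` safety limit are hypothetical names introduced here.

```python
def generated_set(generators, op, max_rounds=1000):
    """Smallest set containing `generators` that is closed under the binary operation `op`."""
    result = set(generators)
    for _ in range(max_rounds):
        new = {op(a, b) for a in result for b in result} - result
        if not new:          # nothing new was produced: the set is closed
            return result
        result |= new
    raise RuntimeError("closure did not stabilise within max_rounds")

def add_mod_12(a, b):
    return (a + b) % 12

print(sorted(generated_set({1}, add_mod_12)))   # a single element generates everything
print(sorted(generated_set({4}, add_mod_12)))   # a different generator yields a smaller set
```

Here the one-element generating set {1} is much simpler to describe than the twelve-element set it generates.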

Idempotence is the property of certain operations in mathematics and computer science whereby they can be applied multiple times without changing the result beyond the initial application. The concept of idempotence arises in a number of places in abstract algebra and functional programming.
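For a unary function f, idempotence means f(f(x)) = f(x). A small sketch, with the helper `is_idempotent_on` and the sample inputs assumed for illustration:

```python
def is_idempotent_on(f, samples):
    """Check f(f(x)) == f(x) for each sample input."""
    return all(f(f(x)) == f(x) for x in samples)

numbers = [-3, -1.5, 0, 2, 7.25]
lists = [[3, 1, 2], [], [5, 5, 4]]

print(is_idempotent_on(abs, numbers))               # taking the absolute value twice changes nothing
print(is_idempotent_on(sorted, lists))              # sorting an already sorted list changes nothing
print(is_idempotent_on(lambda x: x + 1, numbers))   # each increment changes the result again
```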

In mathematics, an involution, or an involutory function, is a function f that is its own inverse: f(f(x)) = x for all x in the domain of f.
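The defining condition is easy to test on samples. In the sketch below, `is_involution_on` and the example functions are assumptions chosen for illustration. Exact rationals are used so that applying a function twice returns exactly the starting value.

```python
from fractions import Fraction

def is_involution_on(f, samples):
    """Check f(f(x)) == x for each sample input."""
    return all(f(f(x)) == x for x in samples)

samples = [Fraction(1, 2), Fraction(-3), Fraction(5, 7)]  # nonzero, so 1/x is defined

def negate(x):
    return -x

def reciprocal(x):
    return 1 / x

def double(x):
    return 2 * x

print(is_involution_on(negate, samples))
print(is_involution_on(reciprocal, samples))
print(is_involution_on(double, samples))   # doubling twice gives 4x, not x
```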

In the theory of vector spaces, a set of vectors is said to be linearly dependent if at least one of the vectors in the set can be defined as a linear combination of the others; if no vector in the set can be written in this way, then the vectors are said to be linearly independent. These concepts are central to the definition of dimension.
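One common way to test this in coordinates is to compare the rank of the vectors with their count: the vectors are independent exactly when the two agree. The sketch below is an assumed approach using Gaussian elimination over the rationals. The function names are illustrative.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of equal-length vectors, by Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivot rows found so far
    for col in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def linearly_independent(vectors):
    return rank(vectors) == len(vectors)

print(linearly_independent([(1, 0, 0), (0, 1, 0), (1, 1, 1)]))
# The second vector is twice the first, so this set is dependent.
print(linearly_independent([(1, 2, 3), (2, 4, 6), (0, 1, 0)]))
```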

W

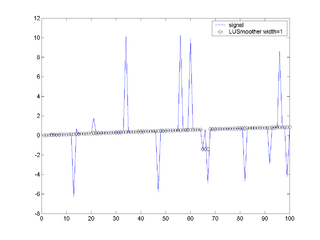

WIn signal processing, Lulu smoothing is a nonlinear mathematical technique for removing impulsive noise from a data sequence such as a time series. It is a nonlinear equivalent to taking a moving average of a time series, and is similar to other nonlinear smoothing techniques, such as Tukey or median smoothing.
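The passage does not give the operators themselves. The sketch below assumes the usual formulation in terms of two operators: L_n takes, at each index, the maximum of the minima over all windows of length n + 1 containing that index, and U_n is the dual (minimum of maxima). Clipping the windows at the ends of the sequence is a further assumption of this sketch, as are the function names.

```python
def _windows(x, i, n):
    """All length-(n+1) windows containing index i, clipped to the bounds of x."""
    for start in range(i - n, i + 1):
        lo, hi = max(start, 0), min(start + n, len(x) - 1)
        yield x[lo:hi + 1]

def lulu_L(x, n):
    """Assumed L_n operator: max over windows of the window minimum (removes upward spikes)."""
    return [max(min(w) for w in _windows(x, i, n)) for i in range(len(x))]

def lulu_U(x, n):
    """Assumed U_n operator: min over windows of the window maximum (removes downward spikes)."""
    return [min(max(w) for w in _windows(x, i, n)) for i in range(len(x))]

signal = [1, 1, 9, 1, 1, -7, 1, 1]   # flat signal with one upward and one downward impulse
after_L = lulu_L(signal, 1)
after_UL = lulu_U(after_L, 1)
print(after_L)
print(after_UL)
```

With n = 1, the first pass removes the isolated upward impulse and the second removes the isolated downward one, while the flat parts of the signal pass through unchanged.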

In mathematics, a multiplicative inverse or reciprocal for a number x, denoted by 1/x or x⁻¹, is a number which when multiplied by x yields the multiplicative identity, 1. The multiplicative inverse of a fraction a/b is b/a. For the multiplicative inverse of a real number, divide 1 by the number. For example, the reciprocal of 5 is one fifth, and the reciprocal of 0.25 is 1 divided by 0.25, or 4. The reciprocal function, the function f(x) that maps x to 1/x, is one of the simplest examples of a function which is its own inverse.
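The worked examples in the paragraph can be reproduced with exact fractions. The helper name `reciprocal` is an assumption of this sketch.

```python
from fractions import Fraction

def reciprocal(x):
    """Multiplicative inverse of a nonzero number."""
    if x == 0:
        raise ZeroDivisionError("0 has no multiplicative inverse")
    return 1 / x

three_sevenths = Fraction(3, 7)
print(reciprocal(three_sevenths))                    # a/b maps to b/a
print(three_sevenths * reciprocal(three_sevenths))   # the product is the identity, 1
print(reciprocal(Fraction(5)))                       # one fifth
print(reciprocal(0.25))                              # 1 divided by 0.25
```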

In mathematics, near sets are either spatially close or descriptively close. Spatially close sets have nonempty intersection. In other words, spatially close sets are not disjoint sets, since they always have at least one element in common. Descriptively close sets contain elements that have matching descriptions. Such sets can be either disjoint or non-disjoint sets. Spatially near sets are also descriptively near sets.
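A small sketch of the two notions, assuming a "description" is the value of some feature function applied to an element. The functions `spatially_near` and `descriptively_near` and the remainder-mod-3 description are hypothetical choices for illustration.

```python
def spatially_near(A, B):
    """Sets are spatially near when they share at least one element."""
    return not A.isdisjoint(B)

def descriptively_near(A, B, describe):
    """Sets are descriptively near when some a in A and b in B have the same description."""
    return not {describe(a) for a in A}.isdisjoint(describe(b) for b in B)

def remainder_mod_3(n):
    return n % 3   # illustrative description of an integer

A, B, C = {1, 2}, {4, 8}, {3}

print(spatially_near(A, B), descriptively_near(A, B, remainder_mod_3))   # disjoint, yet matching descriptions
print(spatially_near(A, C), descriptively_near(A, C, remainder_mod_3))   # near in neither sense
```

A shared element has the same description in both sets, which is why spatial nearness implies descriptive nearness under this reading.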

In mathematics, orthogonality is the generalization of the notion of perpendicularity to the linear algebra of bilinear forms. Two elements u and v of a vector space with bilinear form B are orthogonal when B(u, v) = 0. Depending on the bilinear form, the vector space may contain nonzero self-orthogonal vectors. In the case of function spaces, families of orthogonal functions are used to form a basis.
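To make the role of the bilinear form concrete, the sketch below represents a form on a finite-dimensional space by a matrix M, with B(u, v) = uᵀ M v. The two forms shown (the standard dot product and an indefinite form with signature (+, −)) are illustrative choices, not taken from the text.

```python
def bilinear(M):
    """Return the bilinear form B(u, v) = u^T M v for a square matrix M."""
    def B(u, v):
        return sum(u[i] * M[i][j] * v[j]
                   for i in range(len(u)) for j in range(len(v)))
    return B

dot = bilinear([[1, 0], [0, 1]])           # standard dot product on the plane
indefinite = bilinear([[1, 0], [0, -1]])   # indefinite form, chosen for illustration

print(dot((1, 2), (2, -1)))         # perpendicular vectors in the usual sense
print(dot((1, 1), (1, 1)))          # a nonzero vector is never self-orthogonal for the dot product
print(indefinite((1, 1), (1, 1)))   # but it can be for an indefinite form
```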

The parallel operator is a mathematical function which is used as a shorthand in electrical engineering, but is also used in kinetics, fluid mechanics and financial mathematics.
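The passage does not state the formula. The sketch below assumes the usual definition, a ∥ b = 1/(1/a + 1/b) = ab/(a + b), which in electrical engineering gives the combined resistance of resistors in parallel. The function name `parallel` and the sample values are illustrative.

```python
from fractions import Fraction

def parallel(*values):
    """Reciprocal of the sum of reciprocals of the (nonzero) inputs."""
    return 1 / sum(Fraction(1) / v for v in values)

print(parallel(6, 3))         # two resistors of 6 and 3 ohms in parallel
print(parallel(10, 10, 10))   # three equal resistors in parallel
```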

In linear algebra, a branch of mathematics, the polarization identity is any one of a family of formulas that express the inner product of two vectors in terms of the norm of a normed vector space. Equivalently, the polarization identity describes when a norm can be assumed to arise from an inner product. In that terminology: in a normed space (V, ‖·‖), if the parallelogram law holds, then there is an inner product on V such that ‖x‖² = ⟨x, x⟩ for all x ∈ V.
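For a real inner product space, one member of this family is ⟨x, y⟩ = (‖x + y‖² − ‖x − y‖²) / 4. The sketch below checks that form, together with the parallelogram law, for the Euclidean norm on a pair of sample vectors. The helper names and the vectors are assumptions for illustration.

```python
def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def norm_sq(x):
    """Squared Euclidean norm."""
    return dot(x, x)

def polarization(x, y):
    """Recover <x, y> from squared norms only (real case)."""
    plus = [a + b for a, b in zip(x, y)]
    minus = [a - b for a, b in zip(x, y)]
    return (norm_sq(plus) - norm_sq(minus)) / 4

x, y = (1, 2, 3), (4, -5, 6)
print(dot(x, y), polarization(x, y))

# Parallelogram law: |x + y|^2 + |x - y|^2 == 2|x|^2 + 2|y|^2
plus = [a + b for a, b in zip(x, y)]
minus = [a - b for a, b in zip(x, y)]
print(norm_sq(plus) + norm_sq(minus) == 2 * norm_sq(x) + 2 * norm_sq(y))
```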

In linear algebra, the column space of a matrix A is the span of its column vectors. The column space of a matrix is the image or range of the corresponding matrix transformation.
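The link between the two descriptions is that the product A x is the linear combination of the columns of A with coefficients taken from x. A small sketch with a made-up 3×2 matrix and hypothetical helper names:

```python
def matvec(A, x):
    """Matrix-vector product A x, with A stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def columns(A):
    """Column vectors of A."""
    return [list(col) for col in zip(*A)]

def combine(vectors, coeffs):
    """Linear combination: sum over k of coeffs[k] * vectors[k]."""
    return [sum(c * v[i] for c, v in zip(coeffs, vectors))
            for i in range(len(vectors[0]))]

A = [[1, 0],
     [0, 1],
     [2, 3]]
x = [4, -1]

print(matvec(A, x))
print(combine(columns(A), x))   # same vector, built from the columns
```

Every output of the transformation x ↦ A x is therefore in the span of the columns, and every vector in that span arises from some x.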

W

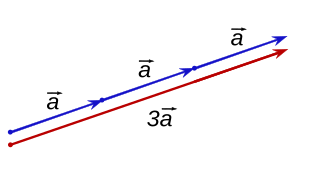

WIn mathematics, scalar multiplication is one of the basic operations defining a vector space in linear algebra. In common geometrical contexts, scalar multiplication of a real Euclidean vector by a positive real number multiplies the magnitude of the vector—without changing its direction. The term "scalar" itself derives from this usage: a scalar is that which scales vectors. Scalar multiplication is the multiplication of a vector by a scalar, and is to be distinguished from inner product of two vectors.
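A brief numerical illustration for a vector in the plane. The function `scale` and the sample values are assumptions for this sketch.

```python
import math

def scale(c, v):
    """Multiply every component of v by the scalar c."""
    return tuple(c * vi for vi in v)

v = (3.0, 4.0)
w = scale(2.5, v)

# The magnitude is multiplied by the scalar ...
print(math.hypot(*v), math.hypot(*w))
# ... while the direction (angle from the x-axis) is unchanged for a positive scalar.
print(math.atan2(v[1], v[0]), math.atan2(w[1], w[0]))
```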

W

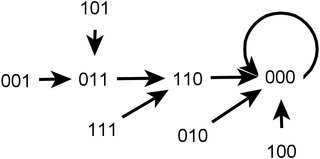

WSequential dynamical systems (SDSs) are a class of graph dynamical systems. They are discrete dynamical systems which generalize many aspects of for example classical cellular automata, and they provide a framework for studying asynchronous processes over graphs. The analysis of SDSs uses techniques from combinatorics, abstract algebra, graph theory, dynamical systems and probability theory.
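The passage does not spell out the construction. The sketch below assumes a common setup: a graph, a binary state at each vertex, a local function applied to a vertex and its neighbours, and an update order in which vertices are updated one at a time. The circle graph on four vertices, the NOR function and all names are illustrative assumptions.

```python
def nor(*bits):
    """1 if every input bit is 0, otherwise 0."""
    return int(not any(bits))

def sds_step(graph, state, order, f=nor):
    """Update vertices one at a time in `order`; later vertices see earlier updates."""
    state = list(state)
    for v in order:
        state[v] = f(state[v], *(state[u] for u in graph[v]))
    return tuple(state)

def synchronous_step(graph, state, f=nor):
    """Update all vertices at once from the old state, as in a cellular automaton."""
    return tuple(f(state[v], *(state[u] for u in graph[v]))
                 for v in range(len(state)))

# Circle graph on vertices 0..3, given as adjacency lists.
circle4 = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
start = (0, 0, 0, 0)

print(sds_step(circle4, start, [0, 1, 2, 3]))
print(sds_step(circle4, start, [3, 2, 1, 0]))   # a different order gives a different result
print(synchronous_step(circle4, start))
```

Comparing the sequential and synchronous results from the same starting state shows why the update order is part of the system's definition in this setup.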

In linear algebra, the transpose of a matrix is an operator which flips a matrix over its diagonal; that is, it switches the row and column indices of the matrix A by producing another matrix, often denoted by Aᵀ.
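With a matrix stored as a list of rows, the index swap can be sketched in one line. The function name `transpose` and the sample matrix are illustrative.

```python
def transpose(A):
    """Return the matrix whose (j, i) entry is the (i, j) entry of A."""
    return [list(row) for row in zip(*A)]

A = [[1, 2, 3],
     [4, 5, 6]]
At = transpose(A)

print(At)
print(transpose(At) == A)   # transposing twice gives back the original matrix
```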