A supercomputer is a computer with a high level of performance as compared to a general-purpose computer. The performance of a supercomputer is commonly measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). Since 2017, supercomputers have existed that can perform over 10^17 FLOPS (a hundred quadrillion FLOPS, 100 petaFLOPS or 100 PFLOPS). Since November 2017, all of the world's fastest 500 supercomputers run Linux-based operating systems. Additional research is being conducted in the United States, the European Union, Taiwan, Japan, and China to build faster, more powerful and technologically superior exascale supercomputers.
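
The unit arithmetic above (10^17 FLOPS = 100 petaFLOPS) can be checked with a small sketch. The function name `format_flops` and the prefix table are illustrative choices, not part of any standard tool.

```python
# SI prefixes commonly attached to FLOPS figures (powers of ten).
# The table and function name are hypothetical helpers for illustration.
PREFIXES = [
    ("exa", 10**18),
    ("peta", 10**15),
    ("tera", 10**12),
    ("giga", 10**9),
    ("mega", 10**6),
]


def format_flops(flops):
    """Express a raw FLOPS figure using the largest SI prefix that fits."""
    for name, scale in PREFIXES:
        if flops >= scale:
            return f"{flops / scale:g} {name}FLOPS"
    return f"{flops:g} FLOPS"


print(format_flops(10**17))  # 100 petaFLOPS
print(format_flops(10**18))  # 1 exaFLOPS
```

An exascale machine is therefore ten times the 100 PFLOPS figure quoted above.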

The TOP500 project ranks and details the 500 most powerful non-distributed computer systems in the world. The project was started in 1993 and publishes an updated list of the supercomputers twice a year. The first of these updates always coincides with the International Supercomputing Conference in June, and the second is presented at the ACM/IEEE Supercomputing Conference in November. The project aims to provide a reliable basis for tracking and detecting trends in high-performance computing and bases rankings on HPL, a portable implementation of the high-performance LINPACK benchmark written in Fortran for distributed-memory computers.
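
To give a feel for what a LINPACK-style measurement involves, here is a minimal single-process sketch. It is not HPL: it solves a small dense system with plain Gaussian elimination and converts the elapsed time into a FLOPS figure. The operation count 2/3·n³ + 2·n² is the commonly quoted LINPACK convention and is used here as an assumption; all function names are hypothetical.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    a = [row[:] for row in a]  # work on copies so the inputs are untouched
    b = b[:]
    for k in range(n):
        # Pick the row with the largest entry in column k as the pivot row.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            b[i] -= factor * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - tail) / a[i][i]
    return x


def linpack_flop_count(n):
    """Assumed nominal operation count for an n x n dense solve."""
    return 2.0 * n**3 / 3.0 + 2.0 * n**2


def measure(n=100, seed=0):
    """Time one solve and return (estimated FLOPS, solution vector)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [sum(row) for row in a]  # chosen so the exact solution is all ones
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    return linpack_flop_count(n) / elapsed, x


if __name__ == "__main__":
    rate, _ = measure(100)
    print(f"{rate:.3g} FLOPS")
```

Interpreted Python will report a rate many orders of magnitude below any ranked system; the point is only the shape of the calculation: a fixed operation count divided by wall-clock time.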

A computer cluster is a set of loosely or tightly connected computers that work together so that, in many aspects, they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software.

Approaches to supercomputer architecture have taken dramatic turns since the earliest systems were introduced in the 1960s. Early supercomputer architectures pioneered by Seymour Cray relied on compact innovative designs and local parallelism to achieve superior computational peak performance. However, in time the demand for increased computational power ushered in the age of massively parallel systems.

The Amalka Supercomputing facility is the largest of the three Czech parallel supercomputers. It is used by the Department of Space Physics, Institute of Atmospheric Physics, Academy of Sciences of the Czech Republic.

Anton is a massively parallel supercomputer designed and built by D. E. Shaw Research in New York, first running in 2008. It is a special-purpose system for molecular dynamics (MD) simulations of proteins and other biological macromolecules. An Anton machine consists of a substantial number of application-specific integrated circuits (ASICs), interconnected by a specialized high-speed, three-dimensional torus network.

BESM-6 was a Soviet electronic computer of the BESM series. It was the first Soviet second-generation computer, based on transistors.

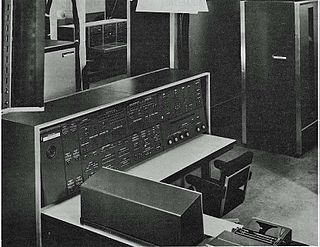

The CDC 6000 series was a family of mainframe computers manufactured by Control Data Corporation in the 1960s. It consisted of the CDC 6200, CDC 6300, CDC 6400, CDC 6500, CDC 6600 and CDC 6700 computers, which were all extremely rapid and efficient for their time. Each was a large, solid-state, general-purpose, digital computer that performed scientific and business data processing as well as multiprogramming, multiprocessing, Remote Job Entry, time-sharing, and data management tasks under the control of the operating system called SCOPE. By 1970 there also was a time-sharing oriented operating system named KRONOS. They were part of the first generation of supercomputers. The 6600 was the flagship of Control Data's 6000 series.

The CDC 6600 was the flagship of the 6000 series of mainframe computer systems manufactured by Control Data Corporation. Generally considered to be the first successful supercomputer, it outperformed the industry's prior recordholder, the IBM 7030 Stretch, by a factor of three. With performance of up to three megaFLOPS, the CDC 6600 was the world's fastest computer from 1964 to 1969, when it relinquished that status to its successor, the CDC 7600.

The CDC 7600 was the Seymour Cray-designed successor to the CDC 6600, extending Control Data's dominance of the supercomputer field into the 1970s. The 7600 ran at 36.4 MHz and had a 65 Kword primary memory using magnetic core and variable-size secondary memory. It was generally about ten times as fast as the CDC 6600 and could deliver about 10 MFLOPS on hand-compiled code, with a peak of 36 MFLOPS. In addition, in benchmark tests in early 1970 it was shown to be slightly faster than its IBM rival, the IBM System/360, Model 195. When the system was released in 1967, it sold for around $5 million in base configurations, and considerably more as options and features were added.

The CDC 8600 was the last of Seymour Cray's supercomputer designs while he worked for Control Data Corporation. As the natural successor to the CDC 6600 and CDC 7600, the 8600 was intended to be about 10 times as fast as the 7600, already the fastest computer on the market. The design was essentially four 7600s, packed into a very small chassis so they could run at higher clock speeds.

The CDC Cyber range of mainframe-class supercomputers were the primary products of Control Data Corporation (CDC) during the 1970s and 1980s. In their day, they were the computer architecture of choice for scientific and mathematically intensive computing. They were used for modeling fluid flow, material science stress analysis, electrochemical machining analysis, probabilistic analysis, energy and academic computing, radiation shielding modeling, and other applications. The lineup also included the Cyber 18 and Cyber 1000 minicomputers. Like their predecessor, the CDC 6600, they were unusual in using the ones' complement binary representation.
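
The ones' complement representation mentioned above can be illustrated with a short sketch. An 8-bit word is assumed purely for readability (it is not the word size of any machine named here), and the helper names are hypothetical.

```python
BITS = 8  # illustrative word width only, chosen to keep the bit patterns short


def encode(value, bits=BITS):
    """Encode a signed integer as a ones' complement bit pattern."""
    limit = (1 << (bits - 1)) - 1
    if not -limit <= value <= limit:
        raise ValueError("value does not fit in the given width")
    mask = (1 << bits) - 1
    if value >= 0:
        return value
    # A negative number is the bitwise inverse of its magnitude.
    return ~(-value) & mask


def decode(word, bits=BITS):
    """Decode a ones' complement bit pattern back to a signed integer."""
    mask = (1 << bits) - 1
    if word >> (bits - 1):  # sign bit set: invert to recover the magnitude
        return -(~word & mask)
    return word


def negate(word, bits=BITS):
    """Negation is a plain bitwise inversion of the whole word."""
    return ~word & ((1 << bits) - 1)


print(f"{encode(5):08b}")   # 00000101
print(f"{encode(-5):08b}")  # 11111010
print(f"{negate(0):08b}")   # 11111111, the "negative zero" pattern
```

The last line shows the characteristic quirk of the scheme: zero has two bit patterns, all zeros and all ones, and both decode to 0.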

Christofari is a supercomputer created by Sberbank of Russia and Nvidia. It was presented by Herman Gref, CEO of Sberbank, and David Rafalovsky, CTO of Sberbank Group, on 8 November 2019, at the AI Journey conference in Moscow.

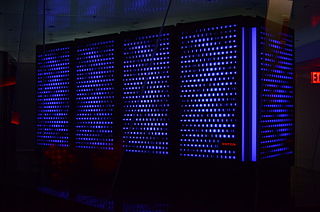

A Connection Machine (CM) is a member of a series of massively parallel supercomputers that grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science.

Control Data Corporation (CDC) was a mainframe and supercomputer firm. CDC was one of the nine major United States computer companies through most of the 1960s; the others were IBM, Burroughs Corporation, DEC, NCR, General Electric, Honeywell, RCA, and UNIVAC. CDC was well-known and highly regarded throughout the industry at the time. For most of the 1960s, Seymour Cray worked at CDC and developed a series of machines that were the fastest computers in the world by far, until Cray left the company to found Cray Research (CRI) in the 1970s. After several years of losses in the early 1980s, in 1988 CDC started to leave the computer manufacturing business and sell the related parts of the company, a process that was completed in 1992 with the creation of Control Data Systems, Inc. The remaining businesses of CDC currently operate as Ceridian.

The Cray T3E was Cray Research's second-generation massively parallel supercomputer architecture, launched in late November 1995. The first T3E was installed at the Pittsburgh Supercomputing Center in 1996. Like the previous Cray T3D, it was a fully distributed memory machine using a 3D torus topology interconnection network. The T3E initially used the DEC Alpha 21164 (EV5) microprocessor and was designed to scale from 8 to 2,176 Processing Elements (PEs). Each PE had between 64 MB and 2 GB of DRAM and a 6-way interconnect router with a payload bandwidth of 480 MB/s in each direction. Unlike many other MPP systems, including the T3D, the T3E was fully self-hosted and ran the UNICOS/mk distributed operating system with a GigaRing I/O subsystem integrated into the torus for network, disk and tape I/O.
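
A 3D torus gives every node exactly six links (plus and minus one step along each of three axes, with wraparound at the edges), which is consistent with a 6-way router. The sketch below illustrates the topology in general; the 4 x 4 x 4 shape and the function name are hypothetical and do not describe an actual T3E configuration.

```python
def torus_neighbors(node, dims):
    """Return the six neighbours of `node` in a 3D torus of shape `dims`.

    Coordinates wrap around, so a node on one face of the grid is directly
    linked to the node on the opposite face.
    """
    neighbors = []
    for axis in range(3):
        for step in (1, -1):
            coords = list(node)
            coords[axis] = (coords[axis] + step) % dims[axis]
            neighbors.append(tuple(coords))
    return neighbors


print(torus_neighbors((0, 0, 0), (4, 4, 4)))
# [(1, 0, 0), (3, 0, 0), (0, 1, 0), (0, 3, 0), (0, 0, 1), (0, 0, 3)]
```

The wraparound links are what distinguish a torus from a plain 3D mesh: corner nodes have the same number of neighbours as interior ones.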

The Elbrus is a line of Soviet and Russian computer systems developed by Lebedev Institute of Precision Mechanics and Computer Engineering. These computers are used in the space program, nuclear weapons research, and defense systems, as well as for theoretical and research purposes, such as experimental Refal and CLU translators.

Finisterrae was the 100th supercomputer in the TOP500 ranking in November 2007. Running at 12.97 teraFLOPS, it would rank at position 258 on the list as of June 2008. It is also the third most powerful supercomputer in Spain. It is located in Galicia.

The Finite Element Machine (FEM) was a NASA project of the late 1970s and early 1980s to build and evaluate the performance of a parallel computer for structural analysis. The FEM was completed and successfully tested at the NASA Langley Research Center in Hampton, Virginia. The motivation for FEM arose from the merger of two concepts: the finite element method of structural analysis and the introduction of relatively low-cost microprocessors.

FROSTBURG was a Connection Machine 5 (CM-5) massively parallel supercomputer used by the US National Security Agency (NSA) to perform higher-level mathematical calculations. The CM-5 was built by the Thinking Machines Corporation, based in Cambridge, Massachusetts, at a cost of US$25 million. The system was installed at NSA in 1991, and operated until 1997. It was the first massively parallel processing computer bought by NSA, originally containing 256 processing nodes. The system was upgraded in 1993 with another 256 nodes, for a total of 512 nodes. The system had a total of 500 billion 32-bit words of storage, 2.5 billion words of memory, and could perform at a theoretical maximum of 65.5 gigaFLOPS. The operating system CMost was based on Unix, but optimized for parallel processing.

The Goodyear Massively Parallel Processor (MPP) was a massively parallel processing supercomputer built by Goodyear Aerospace for the NASA Goddard Space Flight Center. It was designed to deliver enormous computational power at lower cost than other existing supercomputer architectures, by using thousands of simple processing elements, rather than one or a few highly complex CPUs. Development of the MPP began circa 1979; it was delivered in May 1983, and was in general use from 1985 until 1991.

Google Data Centers are the large data center facilities Google uses to provide their services, which combine large drives, computer nodes organized in aisles of racks, internal and external networking, environmental controls, and operations software.

gravitySimulator is a novel supercomputer that incorporates special-purpose GRAPE hardware to solve the gravitational n-body problem. It is housed in the Center for Computational Relativity and Gravitation (CCRG) at the Rochester Institute of Technology. It became operational in 2005.
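
The gravitational n-body problem is dominated by pairwise force evaluation, which is the kind of work special-purpose hardware is built to accelerate. The following is a minimal direct-summation sketch in software, assuming unit gravitational constant by default; it illustrates the calculation in general and is not a description of gravitySimulator's own code. The function and parameter names are hypothetical.

```python
def accelerations(positions, masses, g=1.0, softening=0.0):
    """Direct-summation gravitational accelerations, O(n^2) in body count.

    positions: list of (x, y, z) tuples; masses: list of floats.
    `softening` is an optional length that avoids a division by zero
    when two bodies come very close together.
    """
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            # Separation vector pointing from body i to body j.
            d = [positions[j][k] - positions[i][k] for k in range(3)]
            r2 = sum(c * c for c in d) + softening**2
            inv_r3 = r2 ** -1.5
            for k in range(3):
                acc[i][k] += g * masses[j] * d[k] * inv_r3
    return acc


# Two unit masses one unit apart attract each other with equal and
# opposite accelerations.
print(accelerations([(0, 0, 0), (1, 0, 0)], [1.0, 1.0]))
# [[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]]
```

The double loop makes the quadratic cost explicit: doubling the number of bodies quadruples the number of pair interactions.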

Gyoukou is a supercomputer developed by ExaScaler and PEZY Computing, based around ExaScaler's ZettaScaler immersion cooling system.

The High Performance Computing Center (HLRS) in Stuttgart, Germany, is a research institute and a supercomputer center. Its current flagship installation is an HPE Apollo 9000 system called Hawk, with 26 PFLOPS peak performance, which replaced the Cray XC40 system called Hazel Hen (~7.4 PFLOPS peak performance). Additional systems include NEC clusters and a Cray CS-Storm cluster.

Much of the credit for supercomputers goes to Seymour Cray, the designer of the CDC 6600. The history of supercomputing goes back to the early 1920s in the United States, with the IBM tabulators at Columbia University, and continues with a series of computers at Control Data Corporation (CDC) that Seymour Cray designed to use innovative designs and parallelism to achieve superior computational peak performance. The CDC 6600, released in 1964, is generally considered the first supercomputer. However, some earlier computers were considered supercomputers for their day, such as the 1954 IBM NORC, the 1960 UNIVAC LARC, and the IBM 7030 Stretch and the Atlas, both in 1962.

HPCx was a supercomputer located at the Daresbury Laboratory in Cheshire, England. The supercomputer was maintained by the HPCx Consortium, UoE HPCX Ltd, which was led by the University of Edinburgh: EPCC, with the Science and Technology Facilities Council and IBM. The project was funded by EPSRC.

The ILLIAC IV was the first massively parallel computer. The system was originally designed to have 256 64-bit floating point units (FPUs) and four central processing units (CPUs) able to process 1 billion operations per second. Due to budget constraints, only a single "quadrant" with 64 FPUs and a single CPU was built. Since the FPUs all had to process the same instruction – ADD, SUB etc. – in modern terminology the design would be considered to be single instruction, multiple data, or SIMD.
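
The SIMD idea described above, one instruction broadcast to many units that each hold their own data, can be sketched in a few lines. This is a conceptual model only, with four lanes instead of 64 and hypothetical names throughout; it does not model the ILLIAC IV instruction set.

```python
import operator

# Instructions the (hypothetical) control unit can broadcast.
OPS = {
    "ADD": operator.add,
    "SUB": operator.sub,
    "MUL": operator.mul,
}


def simd_step(instruction, lanes_a, lanes_b):
    """Apply one instruction to every lane's pair of operands.

    Every lane executes the same operation; only the data differs.
    """
    op = OPS[instruction]
    return [op(a, b) for a, b in zip(lanes_a, lanes_b)]


a = [1.0, 2.0, 3.0, 4.0]
b = [10.0, 20.0, 30.0, 40.0]
print(simd_step("ADD", a, b))  # [11.0, 22.0, 33.0, 44.0]
print(simd_step("SUB", b, a))  # [9.0, 18.0, 27.0, 36.0]
```

In hardware the lanes run at the same time rather than in a Python loop, but the constraint is the same: there is no way for lane 0 to add while lane 1 subtracts within a single step.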

The Intel Paragon is a discontinued series of massively parallel supercomputers that was produced by Intel in the 1990s. The Paragon XP/S is a productized version of the experimental Touchstone Delta system that was built at Caltech, launched in 1992. The Paragon superseded Intel's earlier iPSC/860 system, to which it is closely related.

Kendall Square Research (KSR) was a supercomputer company founded in 1986 and originally headquartered in Kendall Square in Cambridge, Massachusetts, near Massachusetts Institute of Technology (MIT). It was co-founded by Steven Frank and Henry Burkhardt III, who had formerly helped found Data General and Encore Computer and was one of the original team that designed the PDP-8. KSR produced two models of supercomputer, the KSR1 and KSR2. It went bankrupt in 1994.

MasPar Computer Corporation was a minisupercomputer vendor that was founded in 1987 by Jeff Kalb. The company was based in Sunnyvale, California.

Meiko Scientific Ltd. was a British supercomputer company based in Bristol, founded by members of the design team working on the INMOS transputer microprocessor.

The Peloton supercomputer purchase is a program at the Lawrence Livermore National Laboratory intended to provide tera-FLOP computing capability using commodity Scalable Units (SUs). The Peloton RFP defines the system configurations.

Vladimir Mstislavovich Pentkovski was a Russian-American computer scientist, a graduate of the Moscow Institute of Physics and Technology, Ph.D., Doctor of Science, distinguished professor, winner of the USSR State Prize (1987), the former Soviet Union's highest prize, and one of the leading architects of the Soviet Elbrus supercomputers and the high-level programming language El-76. In the early 1990s, he immigrated to the United States, where he worked at Intel and led the team that developed the architecture for the Pentium III processor. According to a popular legend, Pentium processors were named after Vladimir Pentkovski.

Quadrics was a supercomputer company formed in 1996 as a joint venture between Alenia Spazio and the technical team from Meiko Scientific. They produced hardware and software for clustering commodity computer systems into massively parallel systems. Their highpoint was in June 2003 when six out of the ten fastest supercomputers in the world were based on Quadrics' interconnect. They officially closed on June 29, 2009.

System X was a supercomputer assembled by Virginia Tech's Advanced Research Computing facility in the summer of 2003. Costing US$5.2 million, it was originally composed of 1,100 Apple Power Mac G5 computers with dual 2.0 GHz processors. System X was decommissioned on May 21, 2012.

Univa is a software company that develops workload management and cloud management products for compute-intensive applications in the data center and across public, private, and hybrid clouds.

The UNIVAC LARC, short for the Livermore Advanced Research Computer, is a mainframe computer designed to a requirement published by Edward Teller in order to run hydrodynamic simulations for nuclear weapon design. It was one of the earliest supercomputers.

The Minnesota Supercomputing Institute (MSI) in Minneapolis, Minnesota is a core research facility of the University of Minnesota that provides hardware and software resources, as well as technical user support, to faculty and researchers at the University and at other institutions of higher education in Minnesota. MSI is located in Walter Library, on the university's Twin Cities campus.